Neural Artistic Style Transfer with Conditional Adversarial Networks

Abstract

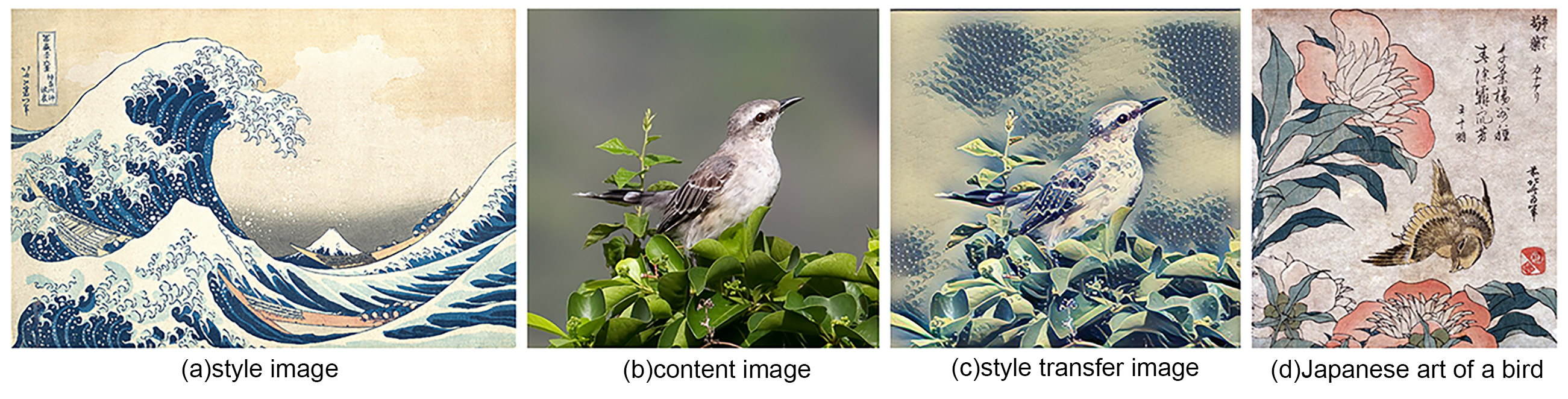

Imposing a style on an image is one of the most laborious tasks in graphic design. Most of the time, this process is handled by a skilled graphic designer, and it can take hours to finish a single image at good quality. Neural style transfer (NST) models are not popular among the computer graphics community for several reasons. Since a model is specialized for the style it was trained on, even a simple application that supports several styles would require significant storage, given the size of a single NST model. Furthermore, NST models impose aliasing artifacts on the input image, which makes them less reliable. Our first goal in this paper is to develop an NST model that supports more than one style. The second goal is to introduce a reliable NST model that imposes only the general features related to a style.

Key Contributions

- Transition from CNN to cGAN for Image Synthesis

- Addressing Limitations of CNN-based NST Models

- Mapping Network for Style Learning

- Dual Discriminators for Content and Style Adversarial Loss

- Influence from Latent Space Control Literature

Lessons Learned

- Advantages of cGAN over CNN

- Dual Discriminators for Enhanced Control

- Model Optimization